Upcoming Webinars

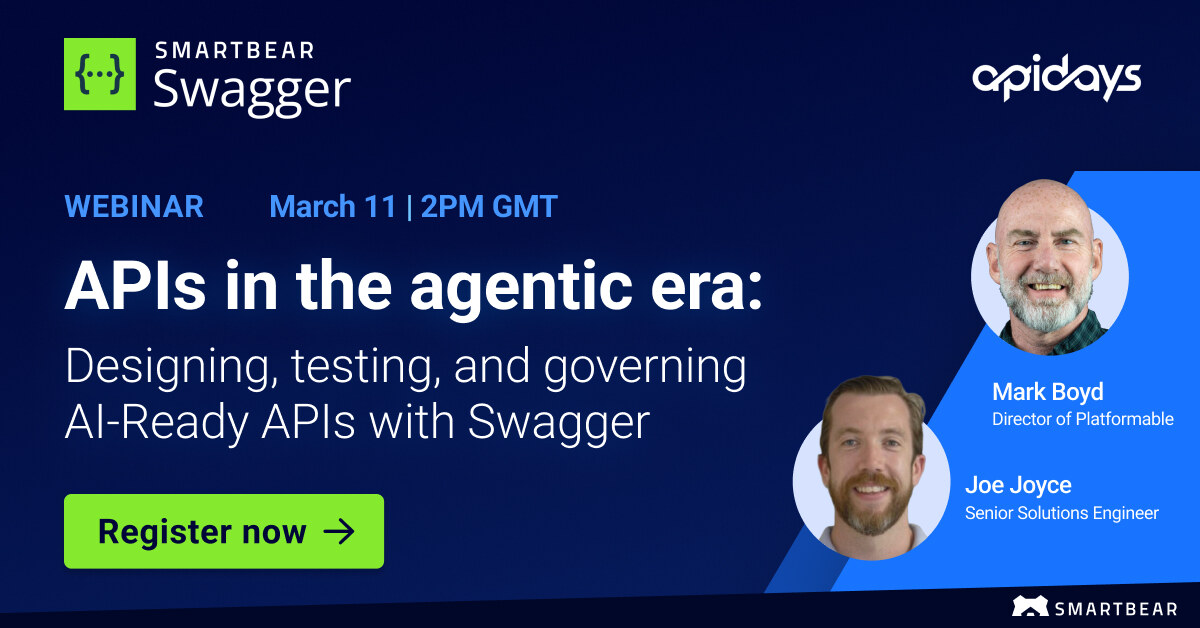

APIs in the Agentic Era: Designing, Testing, and Governing AI-Ready APIs with Swagger

Delivered in partnership with API Days, this webinar explores how AI introduces speed and unpredictability into software systems, and why that makes API quality and governance more critical than ever. The session introduces the 2025 AI-Enabled API Lifecycle Whitepaper and shows how teams can maintain control and quality while adopting AI-driven workflows. We’ll start with…

March 11, 2026

WEBINAR

NISC Modernizes API Management with Swagger and AWS

National Information Solutions Cooperative (NISC) is a member-owned technology company serving more than 1,000 rural energy and communications companies across the U.S., American Samoa, Palau, and Canada. NISC provides advanced, integrated IT solutions for consumer and subscriber billing, accounting, engineering, and operations, along with a wide range of other leading technologies. As its software…

CASE STUDY

Inside the SmartBear Roadmap: Delivering Application Integrity Across the SDLC

As software teams move faster across APIs, testing, and observability, keeping application integrity intact is harder than ever. Join SmartBear product leaders for a Now / Next / Later look at how we’re evolving our platform to help teams build, test, and operate software with confidence. What you’ll get from this session: Get a clear view of where SmartBear is headed and how…

April 8, 2026

WEBINAR

How to Build Automation-Ready API Tests with ReadyAPI

The main challenge of manual API testing is its lack of scalability: it relies on labor-intensive human effort that becomes slower and more error-prone as the number of endpoints and required regression cycles grows. The solution? Automation. For automated API testing to work efficiently, API tests must be automation-ready by design, with…

30 minutes

WEBINAR

How to Build a Scalable Test Framework with TestComplete

As your test suite grows, it is easy to hit a productivity ceiling and get trapped in maintenance mode. Reuse, reliability, and maintainability decide whether automation scales or stalls. In this 30-minute, demo-only technical session, SmartBear Senior Solutions Engineer Mike Flaherty starts with a basic TestComplete project and refactors a standalone test into reusable…

30 minutes

WEBINAR

Ask Me Anything: How to Build a Completely Scalable Testing Engine with Zephyr

2026 is the year for getting the most performance out of your tools. Zephyr is the only Jira-native solution that keeps teams moving faster, offering unbeatable architecture built to scale with native AI-powered automation for boosting coverage and quality. Join our product experts as we answer your most important questions and talk about how…

60 minutes

WEBINAR
