How do we do that? As you can imagine, that becomes the job of those who design, develop, and test the applications connecting the devices that will end up collecting all that big data. Data-driven programming is one of the most in-demand skill sets in this new data-driven world. This programming paradigm is not based on the usual sequence of steps; instead, program statements describe the data to be matched and the processing to apply to it. The work consists of gathering, categorizing, filtering, transforming, and aggregating data, which in turn reveals patterns and produces aggregate results based on those patterns.
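As a minimal sketch of the data-driven style (assuming Python and made-up device readings), the rule table below pairs a predicate describing the data to match with a category and a transformation, while the processing loop itself never changes. The names `READINGS`, `RULES`, `run_rules`, and `aggregate` are hypothetical.

```python
from collections import defaultdict

# Hypothetical sample records, standing in for data collected from devices.
READINGS = [
    {"device": "thermostat-1", "kind": "temperature", "value": 20.0},
    {"device": "thermostat-1", "kind": "humidity", "value": 40.0},
    {"device": "meter-7", "kind": "power", "value": 3.5},
    {"device": "thermostat-2", "kind": "temperature", "value": 25.0},
]

# Each rule: (predicate that matches data, category name, transformation).
# Changing behavior means editing this table, not the loop below.
RULES = [
    (lambda r: r["kind"] == "temperature", "temperature_f", lambda r: r["value"] * 9 / 5 + 32),
    (lambda r: r["kind"] == "humidity", "humidity_pct", lambda r: r["value"]),
]


def run_rules(records, rules):
    """Filter, categorize, and transform records according to the rule table."""
    results = defaultdict(list)
    for record in records:
        for matches, category, transform in rules:
            if matches(record):
                results[category].append(transform(record))
    return dict(results)


def aggregate(results):
    """Reduce each category to its mean value."""
    return {category: sum(values) / len(values) for category, values in results.items()}


categorized = run_rules(READINGS, RULES)
print(categorized)             # {'temperature_f': [68.0, 77.0], 'humidity_pct': [40.0]}
print(aggregate(categorized))  # {'temperature_f': 72.5, 'humidity_pct': 40.0}
```

The power reading matches no rule, so it is filtered out; supporting it would mean adding a row to `RULES`, not changing `run_rules`.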

As we begin to consume this exponential amount of data, the challenge becomes making sure that the data is clean and intact. In some areas, such as distributed messaging systems for the financial, government, and healthcare industries, to name a few, it is absolutely essential that no data is dropped along the way. That’s where software testers rise up as the big data heroes and heroines: they not only make sure we capture and catalog all kinds of data before release, but also make sure the data is processed smoothly throughout the product lifecycle.

In short, software testing is essential to the future of big data and the data-driven world.

What is a data-driven API?

When we talk about the Internet of Things, or even just our smartphones, there’s no doubt that three words are driving those innovations: application programming interface, or API. APIs move data from place to place and device to device, connecting it all in a sensible way. Ultimately, any type of API is, and should be, driven by data: it should focus on providing access to data, independent of business processes.

A major benefit of the data-driven API is that it reduces coupling between data sets and functionality.

A data-driven API, or each API in a series of data-driven APIs, can essentially act autonomously. Because each one stands on its own, you can implement them separately, which means you can build, test, and deploy more efficiently and with greater agility.

Another benefit of the data-driven API is that it can use its data to draw conclusions and then act or react. A great example in the IoT space is the API behind a smart thermostat, which accepts data inputs such as temperature and humidity and adjusts based on the combination of these variables and the consumer’s fixed preferences. But the data-driven API will really shine when we soon have a connected ecosystem of different devices sharing different data streams from various API providers, which in turn will create hundreds if not thousands of observer-subject pairs. A well-designed, well-automated, well-tested data-driven API will be key to the success of our connected world.
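To make the thermostat example concrete, here is a minimal observer-pattern sketch in Python: a sensor (the subject) publishes readings, and a thermostat (the observer) decides what to do from the combination of each reading and fixed preferences. All class names, thresholds, and actions are hypothetical; a real device API would look different.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Reading:
    temperature_c: float
    humidity_pct: float


@dataclass
class Preferences:
    # Hypothetical fixed consumer preferences.
    target_c: float = 21.0
    tolerance_c: float = 0.5
    max_humidity_pct: float = 60.0


class SensorSubject:
    """Subject: publishes each reading to every registered observer."""

    def __init__(self) -> None:
        self._observers: List[Callable[[Reading], None]] = []

    def subscribe(self, observer: Callable[[Reading], None]) -> None:
        self._observers.append(observer)

    def publish(self, reading: Reading) -> None:
        for observer in self._observers:
            observer(reading)


class Thermostat:
    """Observer: chooses an action from the reading plus fixed preferences."""

    def __init__(self, prefs: Preferences) -> None:
        self.prefs = prefs
        self.actions: List[str] = []

    def __call__(self, reading: Reading) -> None:
        self.actions.append(self.decide(reading))

    def decide(self, r: Reading) -> str:
        p = self.prefs
        if r.temperature_c < p.target_c - p.tolerance_c:
            return "heat"
        if r.temperature_c > p.target_c + p.tolerance_c:
            return "cool"
        if r.humidity_pct > p.max_humidity_pct:
            return "dehumidify"
        return "idle"


sensor = SensorSubject()
thermostat = Thermostat(Preferences())
sensor.subscribe(thermostat)
for reading in [Reading(18.0, 45.0), Reading(21.0, 70.0), Reading(21.2, 40.0), Reading(23.0, 50.0)]:
    sensor.publish(reading)
print(thermostat.actions)  # ['heat', 'dehumidify', 'idle', 'cool']
```

Each `subscribe` call creates one observer-subject pair; in a connected ecosystem, many sensors and many consumers would be wired together the same way.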

What is data-driven testing?

Data-driven testing is a testing methodology in which the test case data is separated from the test case logic. You create test scripts that perform the same steps repeatedly, in the same order, but with different data each time. In data-driven testing, a data source, such as a spreadsheet or other table, supplies the input values, and typically the test environment settings are not hard-coded either.
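Here is a minimal sketch of the idea using Python’s built-in `unittest` module: the test logic is written once, and the data lives in a separate table that the test iterates over with `subTest`, so each row is reported individually. The function under test, `is_valid_username`, and its validation rule are hypothetical.

```python
import io
import re
import unittest


# Hypothetical function under test: 3 to 32 letters, digits, or underscores.
def is_valid_username(name: str) -> bool:
    return re.fullmatch(r"[A-Za-z0-9_]{3,32}", name) is not None


# Test data, kept separate from the test logic: (input, expected result).
CASES = [
    ("alice", True),
    ("bob_1", True),
    ("", False),
    ("ab", False),
    ("a" * 33, False),
    ("bad name", False),
]


class UsernameTest(unittest.TestCase):
    def test_usernames(self):
        # The same steps run for every row; only the data changes.
        for name, expected in CASES:
            with self.subTest(name=name):
                self.assertEqual(is_valid_username(name), expected)


def run_suite() -> unittest.TestResult:
    """Run the test case programmatically and return the result object."""
    suite = unittest.TestLoader().loadTestsFromTestCase(UsernameTest)
    return unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)


if __name__ == "__main__":
    print(run_suite().wasSuccessful())  # True
```

Adding a new case means adding a tuple to `CASES`; the test method does not change.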

As an extension of your automated test cases, data-driven testing loads data from outside your functional tests and uses it to extend those cases.

An automated data-driven test is usually made up of these components:

- a data source, such as a spreadsheet, JDBC or ODBC source, file directory, data pool, or comma-separated text file

- test steps that use the data, such as requests, assertions, XPath queries, or scripts

- a data loop that repeats the test with the next row of data

That last component is what makes data-driven testing interesting: the test logic is written once and reused for every row, which reduces maintenance and improves coverage.
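The three components can be sketched in a few lines of Python. In this sketch a CSV string stands in for the data source, a fake login function stands in for the API under test, and every name (`CSV_SOURCE`, `fake_login`, `run_test_steps`, `data_loop`) is hypothetical.

```python
import csv
import io

# 1. Data source: a comma-separated file (an in-memory string stands in for it).
CSV_SOURCE = """username,password,expected_status
alice,correct-horse,200
alice,wrong,401
,anything,400
"""

# Hypothetical system under test: a fake login endpoint returning HTTP-style codes.
USERS = {"alice": "correct-horse"}


def fake_login(username: str, password: str) -> int:
    if not username:
        return 400
    return 200 if USERS.get(username) == password else 401


# 2. Test steps: build the "request" from one row, then assert on the response.
def run_test_steps(row: dict) -> bool:
    status = fake_login(row["username"], row["password"])
    return status == int(row["expected_status"])


# 3. Data loop: repeat the same steps with each row of the data source.
def data_loop(source: str) -> list:
    return [run_test_steps(row) for row in csv.DictReader(io.StringIO(source))]


print(data_loop(CSV_SOURCE))  # [True, True, True]
```

Covering another case means adding a row to `CSV_SOURCE`; `run_test_steps` stays the same, which is the reuse described above.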

An example of a data-driven API test

What is included in a data-driven test?

- a data set

- testing logic (request / response model)

- the expected output

- the actual output

Because the data is kept separate from the test commands, testers often drive the test from a table, which makes it easy to compare the expected output with the actual output.
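A minimal Python sketch of such a comparison table: each row pairs an input with an expected output, and the actual output comes from a hypothetical `normalize_sku` function. The third row’s expected value is deliberately wrong so that the table shows a failure.

```python
# Hypothetical function under test: trims whitespace and uppercases a SKU.
def normalize_sku(raw: str) -> str:
    return raw.strip().upper()


# (input, expected output); the last expectation is deliberately wrong.
DATA = [(" ab-1 ", "AB-1"), ("cd-2", "CD-2"), ("ef-3 ", "EF3")]


def build_table(data):
    """Return (input, expected, actual, result) for every data row."""
    table = []
    for raw, expected in data:
        actual = normalize_sku(raw)
        table.append((raw, expected, actual, "PASS" if actual == expected else "FAIL"))
    return table


def format_table(table) -> str:
    """Render the comparison as fixed-width text; inputs are repr'd to show whitespace."""
    lines = [f"{'input':<10}{'expected':<10}{'actual':<10}result"]
    for raw, expected, actual, result in table:
        lines.append(f"{raw!r:<10}{expected:<10}{actual:<10}{result}")
    return "\n".join(lines)


print(format_table(build_table(DATA)))
```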

The data for these automated tests is kept in storage that is logically organized to match your test sequence. To change a test, you just modify the data in your data source. Typically this storage contains both input data and verification values, which lets you specify the expected result for each portion of test data.

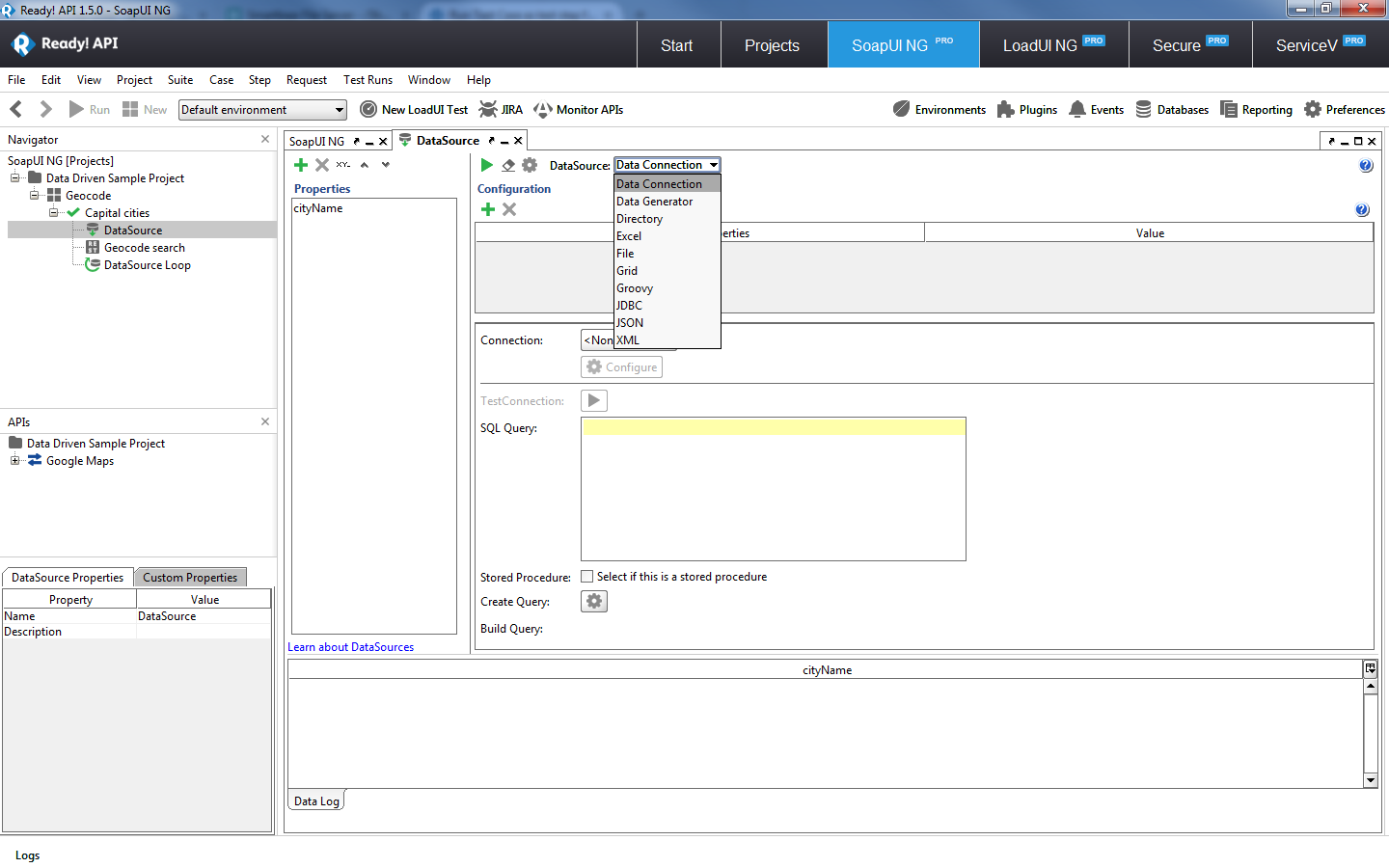

Configuring different types of data inputs for executing a data-driven test

In data-driven test automation, data files are used to test how an application responds to different inputs. These automated tests play back a programmed sequence of user actions with each set of inputs.

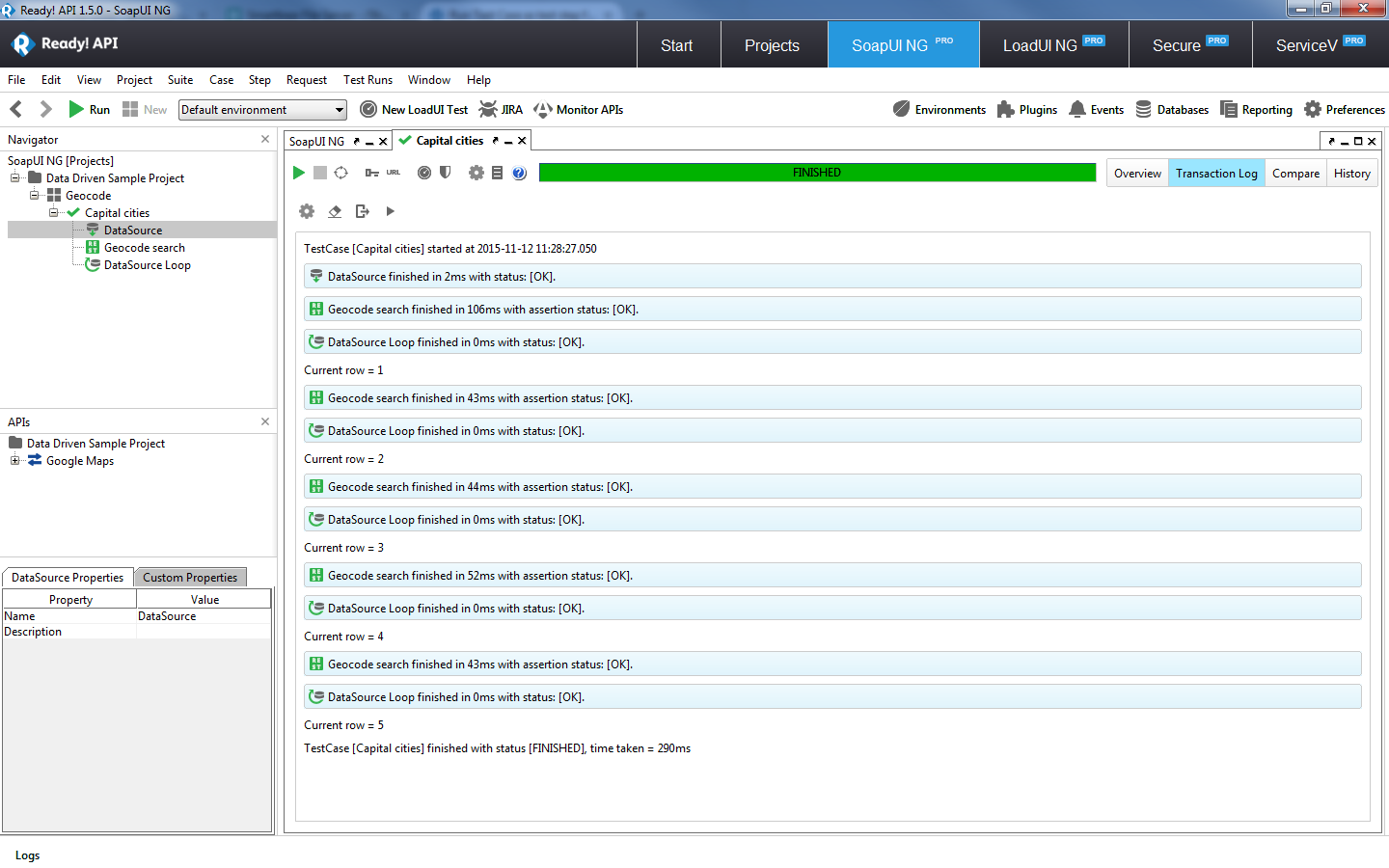

What is the sequence of a data-driven test?

- A portion of data is retrieved from storage.

- The data is injected into API requests based on tokenization rules.

- The results are verified.

- The test is repeated with the next row of input data.

Depending on how you’ve set up your test, this loop continues either until the test has used the entire data store or until it encounters an error.
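The four-step sequence, including the choice between stopping at the first error and running through all of the data, might look like this in Python. Here `string.Template` placeholders such as `${sku}` play the role of tokenization rules; the request format, prices, data rows, and `fake_api` function are hypothetical stand-ins for a real API and data store.

```python
import json
from string import Template

# Request template with tokens; the format and endpoint path are hypothetical.
REQUEST_TEMPLATE = Template('{"path": "/items/${sku}", "qty": ${qty}}')

DATA_STORE = [
    {"sku": "A100", "qty": "2", "expected_total": "20"},
    {"sku": "B200", "qty": "1", "expected_total": "15"},
    {"sku": "C300", "qty": "3", "expected_total": "99"},  # deliberately wrong expectation
    {"sku": "A100", "qty": "5", "expected_total": "50"},
]

PRICES = {"A100": 10, "B200": 15, "C300": 7}


def fake_api(request_body: str) -> dict:
    """Hypothetical stand-in for the API: returns the total for the requested item."""
    req = json.loads(request_body)
    sku = req["path"].rsplit("/", 1)[-1]
    return {"total": PRICES[sku] * req["qty"]}


def run(data_store, stop_on_failure=True):
    results = []
    for row in data_store:                        # 1. retrieve a row from storage
        body = REQUEST_TEMPLATE.substitute(row)   # 2. inject the data via tokens
        response = fake_api(body)
        passed = response["total"] == int(row["expected_total"])  # 3. verify
        results.append((row["sku"], passed))
        if not passed and stop_on_failure:
            break                                 # stop at the first error
    return results                                # 4. otherwise, next row


print(run(DATA_STORE))                         # [('A100', True), ('B200', True), ('C300', False)]
print(run(DATA_STORE, stop_on_failure=False))  # [('A100', True), ('B200', True), ('C300', False), ('A100', True)]
```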

Figure 3: Example data-driven test results

What is data-driven testing commonly used for?

Data-driven testing is usually applied to applications that have a fixed set of actions but many variations and permutations of input. Common use cases are customer order forms and simple inventory forms, where you can get items, add items, and log items. In your automated testing tool, you add the same column headers that appear in your data sheet as Properties, and then run a data-driven test to make sure the items actually end up where they should be. In these situations, you record a single automated test and then simply supply values for each field. When you want to repeat the test with new kinds of data, you enter them into the data fields and run the test again; repeating the test with each new set of data helps verify both accuracy and functionality.
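As a sketch of the inventory case, assuming a hypothetical in-memory `Inventory` class standing in for the form, one recorded sequence of actions (add, add again, get, check the log) is replayed with each row’s values. The column names mirror what a data sheet might contain and are illustrative only.

```python
class Inventory:
    """Hypothetical inventory 'form' with a fixed set of actions."""

    def __init__(self):
        self._stock = {}
        self.log = []

    def add_item(self, name, qty):
        self._stock[name] = self._stock.get(name, 0) + qty
        self.log.append(f"add {name} {qty}")

    def get_item(self, name):
        return self._stock.get(name, 0)


# Each row uses the same columns as the (hypothetical) data sheet.
ROWS = [
    {"item": "widget", "first_add": 3, "second_add": 2, "expected": 5},
    {"item": "gadget", "first_add": 0, "second_add": 7, "expected": 7},
    {"item": "bolt", "first_add": 100, "second_add": 0, "expected": 100},
]


def replay_recorded_test(row):
    """One recorded sequence of actions, replayed with one row's values."""
    inv = Inventory()
    inv.add_item(row["item"], row["first_add"])
    inv.add_item(row["item"], row["second_add"])
    return inv.get_item(row["item"]) == row["expected"] and len(inv.log) == 2


print([replay_recorded_test(row) for row in ROWS])  # [True, True, True]
```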

How do you build a data-driven API test?

- Make a list of the fixed sets of actions that your application should be performing against your API.

- Gather the data you want to test, putting it inside a table or spreadsheet, commonly referred to as “storage”.

- Write the test logic using a fixed set of test data.

- Replace the fixed test data with variables.

- Assign values from the data storage to those variables.
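The last three steps can be illustrated with a small Python sketch. The function under test, `full_name`, and the stored rows are hypothetical; the point is how the hard-coded values in the first check become variables that are then filled from storage.

```python
# Hypothetical function under test.
def full_name(first: str, last: str) -> str:
    return f"{first.strip().title()} {last.strip().title()}"


# Test logic with a fixed, hard-coded set of test data.
def check_fixed():
    assert full_name("ada", "lovelace") == "Ada Lovelace"


# The same logic with the fixed data replaced by variables.
def check(first, last, expected):
    assert full_name(first, last) == expected


# Values assigned to those variables from the data storage.
STORAGE = [
    ("ada", "lovelace", "Ada Lovelace"),
    ("  grace ", "hopper", "Grace Hopper"),
    ("ALAN", "turing", "Alan Turing"),
]


def run_all() -> int:
    """Run the fixed check, then the variable check once per stored row."""
    check_fixed()
    for first, last, expected in STORAGE:
        check(first, last, expected)
    return len(STORAGE)


print(run_all())  # 3
```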

Performing data-driven testing manually doesn’t take an advanced skill set, but it does require attention to detail up front. Automated data-driven testing verifies data accuracy just as well, in a fraction of the time.

What are the benefits of data-driven testing?

One benefit of data-driven testing is that these tests can be designed while the application is still being developed. And, as mentioned earlier, separating the test case from the test data means that changes to the test cases or test scripts don’t affect the test data, so you can update each independently.

Of course, it’s also compelling that these tests are repeatable and reusable, while needing significantly less code to run many more test cases. A single control script can execute all of the tests.

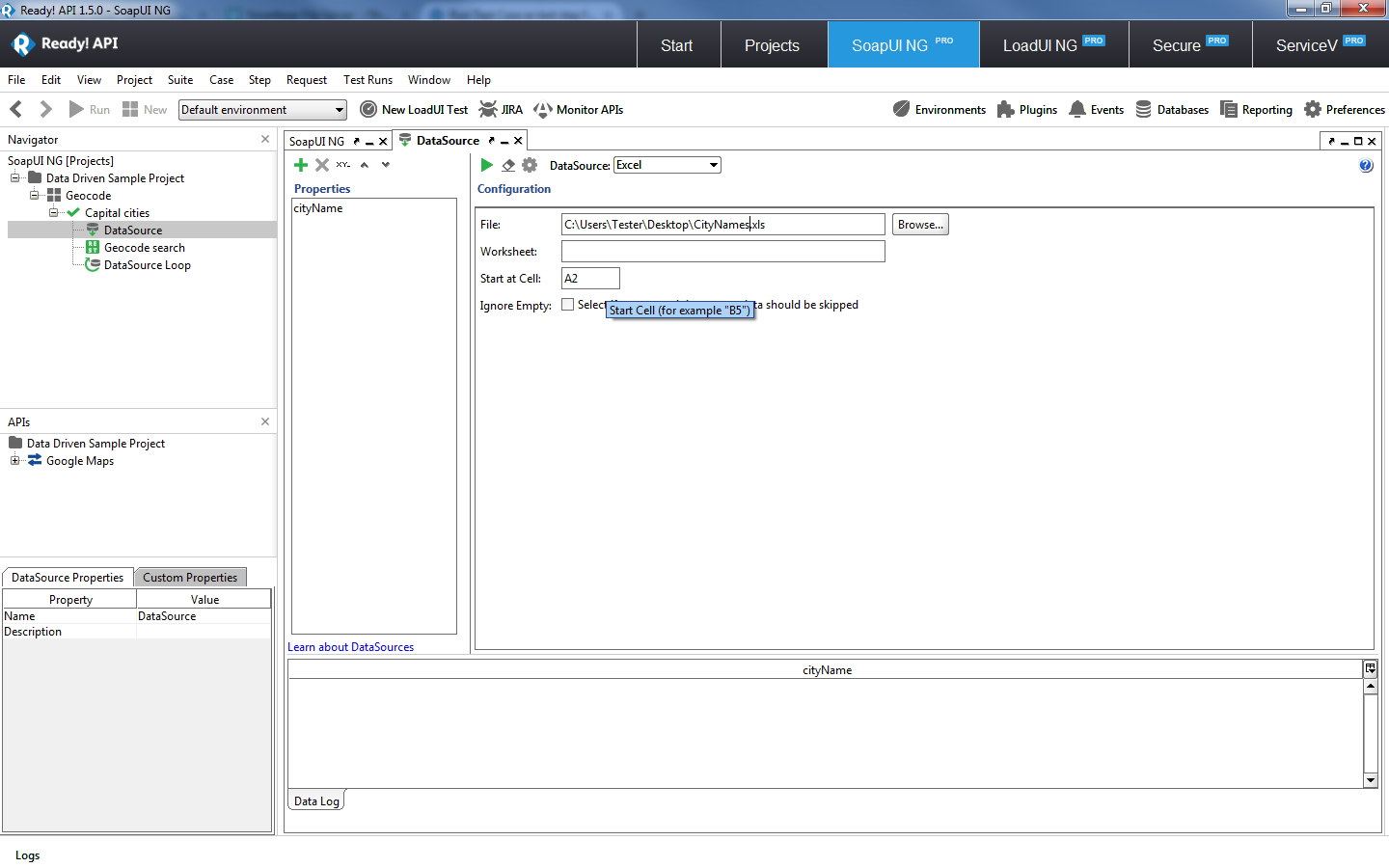

Tip: When you import from a data source such as an Excel spreadsheet, make sure the header row isn’t treated as data by directing the test automation tool to start at the second row.
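If the spreadsheet is exported to CSV, the same tip can be applied in Python by starting at the second row. This sketch uses the standard `csv` module with hypothetical column names; if you read the `.xlsx` file directly with the third-party openpyxl library, `worksheet.iter_rows(min_row=2, values_only=True)` serves the same purpose.

```python
import csv
import io

# Hypothetical sheet exported to CSV; row 1 is the header.
EXPORTED_SHEET = """quantity,unit_price,expected_total
2,10,20
3,5,15
"""


def load_rows(text: str, start_row: int = 2) -> list:
    """Return rows from the given 1-based row onward, so the header is skipped by default."""
    reader = csv.reader(io.StringIO(text))
    return [row for i, row in enumerate(reader, start=1) if i >= start_row]


def check_totals(rows) -> list:
    """Verify quantity * unit_price == expected_total for each data row."""
    return [int(q) * int(p) == int(t) for q, p, t in rows]


print(check_totals(load_rows(EXPORTED_SHEET)))  # [True, True]
```

Starting at row 1 instead would pass the header through as data, and `int("quantity")` would raise a `ValueError`.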

Figure 4: Specifying the starting cell for an Excel data source.

Like a proper scientific experiment, data-driven testing gets its value from separating out anything that can vary and treating it as an external asset rather than as part of the test. This means the script focuses solely on the behavior of the app when it works with specific data values.

Be sure to research which test automation tool, and in particular which plan, is right for your data and your efficiency needs. In SoapUI, for example, the free version requires you to create XPath assertions manually, while the Pro version includes a wizard that generates them for you. Then, when something fails in the test, the incorrect input is highlighted or shown in a different color, so you can recognize errors at a glance. You can also decide whether the entire test should stop at the first failure or run to completion and mark every failure along the way.
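To show what an XPath assertion checks, here is a minimal sketch using Python’s built-in `xml.etree.ElementTree`, which supports a limited subset of XPath. The response XML, element names, and expected values are hypothetical, and the assertions are themselves stored as data. This version runs through every assertion and collects all failures rather than stopping at the first one.

```python
import xml.etree.ElementTree as ET

# A sample XML response; element names and values are hypothetical.
RESPONSE = """<order>
  <id>1042</id>
  <status>SHIPPED</status>
  <items>
    <item sku="A100"><qty>2</qty></item>
    <item sku="B200"><qty>1</qty></item>
  </items>
</order>"""

# Each assertion is data: an XPath expression and the expected text.
XPATH_ASSERTIONS = [
    ("./id", "1042"),
    ("./status", "SHIPPED"),
    ("./items/item[@sku='B200']/qty", "1"),
    ("./items/item[@sku='A100']/qty", "3"),  # deliberately wrong, to show a failure
]


def check_xpath_assertions(xml_text, assertions):
    """Evaluate every assertion and return (path, expected, actual) for each failure."""
    root = ET.fromstring(xml_text)
    failures = []
    for path, expected in assertions:
        actual = root.findtext(path)  # None if the path matches nothing
        if actual != expected:
            failures.append((path, expected, actual))
    return failures


print(check_xpath_assertions(RESPONSE, XPATH_ASSERTIONS))
# [("./items/item[@sku='A100']/qty", '3', '2')]
```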

Further Resources