A Day in the Life of an SDET: How to Use Automation to Improve Your Workflow

Software testers, or SDETs, have several responsibilities in today's QA-driven software industry. In addition to collaborating with developers to plan and execute tests, they are often responsible for setting up and maintaining continuous integration (CI) processes, analyzing test results, and generating reports for stakeholders.

In this article, you'll learn about the evolution of the SDET role and how automation and artificial intelligence (AI) can help improve efficiency.

What's a Test Engineer?

Manual tests require relatively few technical skills. In the past, QA testers would receive a checklist of actions, complete the steps, and record unexpected results in a bug tracker. Manual tests don't scale well enough for many of today's software projects, so automated tests have become the new standard.

Software Development Engineers in Test (SDETs) emerged to fill the gap between manual QA testers and developers. While manual QA testers focus on black box application tests (e.g., trying out an application), SDETs focus on writing automated tests using both white box (e.g., placing tests inside the application) and black box testing techniques.

Successful SDETs require technical expertise and a broad range of other skill sets; they must possess project management, customer service, and exceptional communication skills. In today's environment, many SDETs have in-depth coding and DevOps knowledge, making them an essential part of any team.

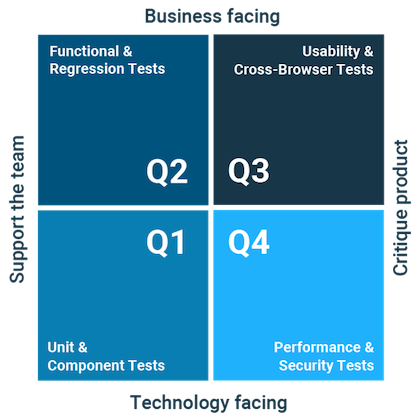

What Tests Do SDETs Run?

As software tests shift left, they have become more complex and integrated with the software development lifecycle. SDETs typically write tests before, or during, the development process using various tests and methodologies. This ensures functionality, performance, security, and usability – and, ultimately, an exceptional user experience.

Some of the most common tests include:

- Functional Tests – Ensure the software meets functional requirements. While developers may write unit tests, SDETs may be responsible for integration-level tests.

- Visual Tests – Verify that user interface elements appear and function correctly across devices, browsers, and screen resolutions using manual or automated methods.

- Performance Tests – Validate an application's stability and responsiveness under different conditions by simulating actual visits or using protocol-level requests.

For each of these tests, SDETs may be responsible for multiple tasks spanning design, creation, execution, and debugging. This is in addition to addressing any issues that arise during the testing process. While automation helps improve the overall test scalability, it adds new technical and maintenance requirements for test engineers.

Some tasks SDETs are responsible for include:

- Planning – They work with developers, product managers, and other stakeholders to understand the requirements, including test environments, test data, and other elements.

- Scripting – They develop test cases and scenarios, incorporate input data, and set validation criteria in test scripts in various languages and frameworks.

- Automation – They may implement and maintain automated test frameworks and tools, including continuous integration (CI) portions of the CI/CD pipeline.

- Validation – They must validate the results of some tests where the result isn't definable in code. For instance, UI tests may require a spot check to differentiate between a legitimate failure and a false positive before developers can diagnose it.

- Debugging – They collaborate with developers to isolate, reproduce, and diagnose the root cause of bugs and may also verify that the proposed fixes resolve the issues.

- Documentation – They may need to document test strategies, circulate test results, or generate reports for stakeholders.

These tasks aren't an exhaustive list of what SDETs do. They also keep up to date with the latest methodologies, tools, and best practices while ensuring test suites meet the increasingly demanding quality requirements.

Challenges of Automating Manual Tests

Some tests are more difficult to automate than others. Take UI tests, for example. An automated script can check a web app's DOM for the presence of a button using its ID, but a CSS error may result in the button "appearing" off-screen. As a result, these tests are often manual endeavors that require a lot of time to complete.
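
The gap between "present in the DOM" and "visible to the user" can be illustrated with a minimal sketch. The helper name `isInViewport` and the sample rectangles are hypothetical; in a browser, the rectangle would typically come from `element.getBoundingClientRect()` and the viewport size from `window.innerWidth` and `window.innerHeight`.

```javascript
// Hypothetical helper: returns true only when the rectangle overlaps the viewport.
function isInViewport(rect, viewport) {
  return (
    rect.right > 0 &&
    rect.bottom > 0 &&
    rect.left < viewport.width &&
    rect.top < viewport.height
  );
}

// Assumed viewport size, for illustration only.
const viewport = { width: 1280, height: 720 };

// A button that exists in the DOM but was pushed off-screen by a stray CSS rule.
const brokenButton = { left: -9999, top: 100, right: -9879, bottom: 140 };
// The same button rendered where the design intends it.
const healthyButton = { left: 40, top: 100, right: 160, bottom: 140 };

console.log(isInViewport(brokenButton, viewport));  // false
console.log(isInViewport(healthyButton, viewport)); // true
```

A lookup by ID would succeed for both buttons, so a presence-only assertion passes even though a user never sees the first one. A geometry check like this catches that one failure mode, but not others, such as overlapping elements or wrong colors.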

At the same time, automating some of these manual tasks carries a high cost. For instance, a visual test built on pixel-by-pixel screenshot comparisons can produce many false positives, leading to wasted time and effort. Likewise, trying to improve coverage with too many assertions can lead to flaky tests.
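
To see why strict pixel comparisons are noisy, here is a rough sketch that compares two tiny "screenshots" stored as flat RGBA arrays. The function name `countDifferentPixels`, its `tolerance` parameter, and the sample data are assumptions made for illustration; this is not how any particular visual testing tool works.

```javascript
// Counts pixels whose RGBA channels differ by more than `tolerance`.
// Both inputs are flat RGBA arrays (4 values per pixel) of equal length.
function countDifferentPixels(baseline, candidate, tolerance = 0) {
  if (baseline.length !== candidate.length) {
    throw new Error('Images must have the same dimensions');
  }
  let different = 0;
  for (let i = 0; i < baseline.length; i += 4) {
    for (let channel = 0; channel < 4; channel++) {
      if (Math.abs(baseline[i + channel] - candidate[i + channel]) > tolerance) {
        different++;
        break; // count each pixel at most once
      }
    }
  }
  return different;
}

// Two 2-pixel images. The second differs by one unit in a single channel,
// the kind of shift that anti-aliasing or font rendering can introduce.
const baseline = [51, 51, 51, 255, 255, 255, 255, 255];
const rerendered = [52, 51, 51, 255, 255, 255, 255, 255];

console.log(countDifferentPixels(baseline, rerendered));    // 1
console.log(countDifferentPixels(baseline, rerendered, 2)); // 0
```

With zero tolerance, a change no human would notice still fails the comparison. Raising the tolerance hides that noise but can also hide small regressions, which is the trade-off that makes hand-tuned pixel comparisons expensive to maintain.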

How Artificial Intelligence Can Help

The rise of AI and machine learning algorithms addresses these problems in novel ways. For example, new large language models (LLMs) can help with everything from writing test scripts to debugging to documenting results. And these efforts can free up SDETs to focus on maximizing test coverage at a higher level. New AI-powered tools can also help improve tests by making them more accurate and less flaky, saving time.

For example, consider the following Cypress visual test script:

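    describe('UI looks correct', () => {
      // ...

      it('tests the header shows correctly', () => {
        // have.css compares computed styles, so colors must be written as rgb()
        cy.get('header')
          .should('be.visible')
          .should('have.css', 'background-color', 'rgb(51, 51, 51)') // #333333
          .should('have.css', 'height', '50px')

        cy.get('.logo')
          .should('be.visible')
          .should('have.css', 'width', '100px')
          // a computed height is never 'auto'; check that it resolved to a real size
          .invoke('height')
          .should('be.greaterThan', 0)
      })

      // ...
    })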

Writing tests like these takes a lot of time. Worse, each tweak to the visual design could require extensive updates to the tests, making them difficult to maintain. And when a single assertion fails, you know only that the header had a problem, which makes it hard to pinpoint the cause and work out a fix.

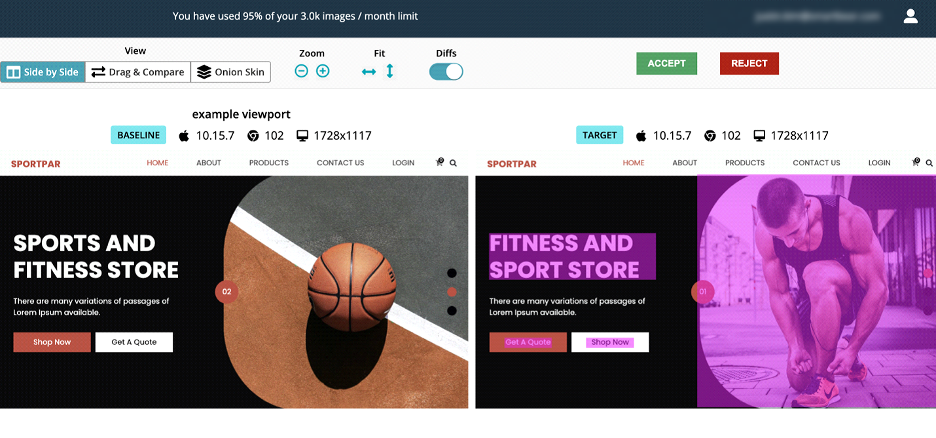

VisualTest solves these problems with AI. After installing the npm package and initializing the driver, you can insert a single line of code to take a screenshot within your tests: `cy.sbvtCapture('example full page')`. AI algorithms then automatically determine when the UI experiences a meaningful change and alert testers.

VisualTest automatically highlights changes for SDETs to approve without relying on flaky or difficult-to-maintain test scripts. Source: SmartBear

The value of these AI-powered tools can be significant once you factor in the time it takes to write and maintain visual UI tests, not to mention the time wasted when flaky tests produce false positives or results that require extensive debugging. With tools like VisualTest, you spend less time building and maintaining tests and get more accurate results.

The Bottom Line

Software tests have evolved from manual checks run just before deployment to automated tests written before any code. While these new approaches help improve quality and coverage, they introduce new challenges for SDETs and make test suites harder to scale. Fortunately, AI can help simplify complex tests and ship projects faster.

If you want to see how VisualTest can help automate and streamline your UI tests